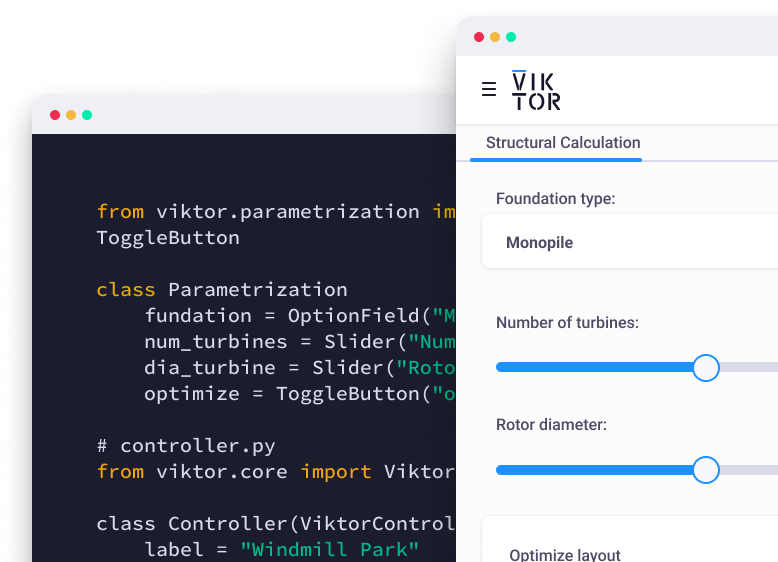

AI coding agents give beginners a strong start: you can describe an engineering tool in simple words and get a first version in minutes. VIKTOR offers the App Builder, which lets you create engineering automations using natural language, and you can even edit the code directly in your browser with help from the AI agent.

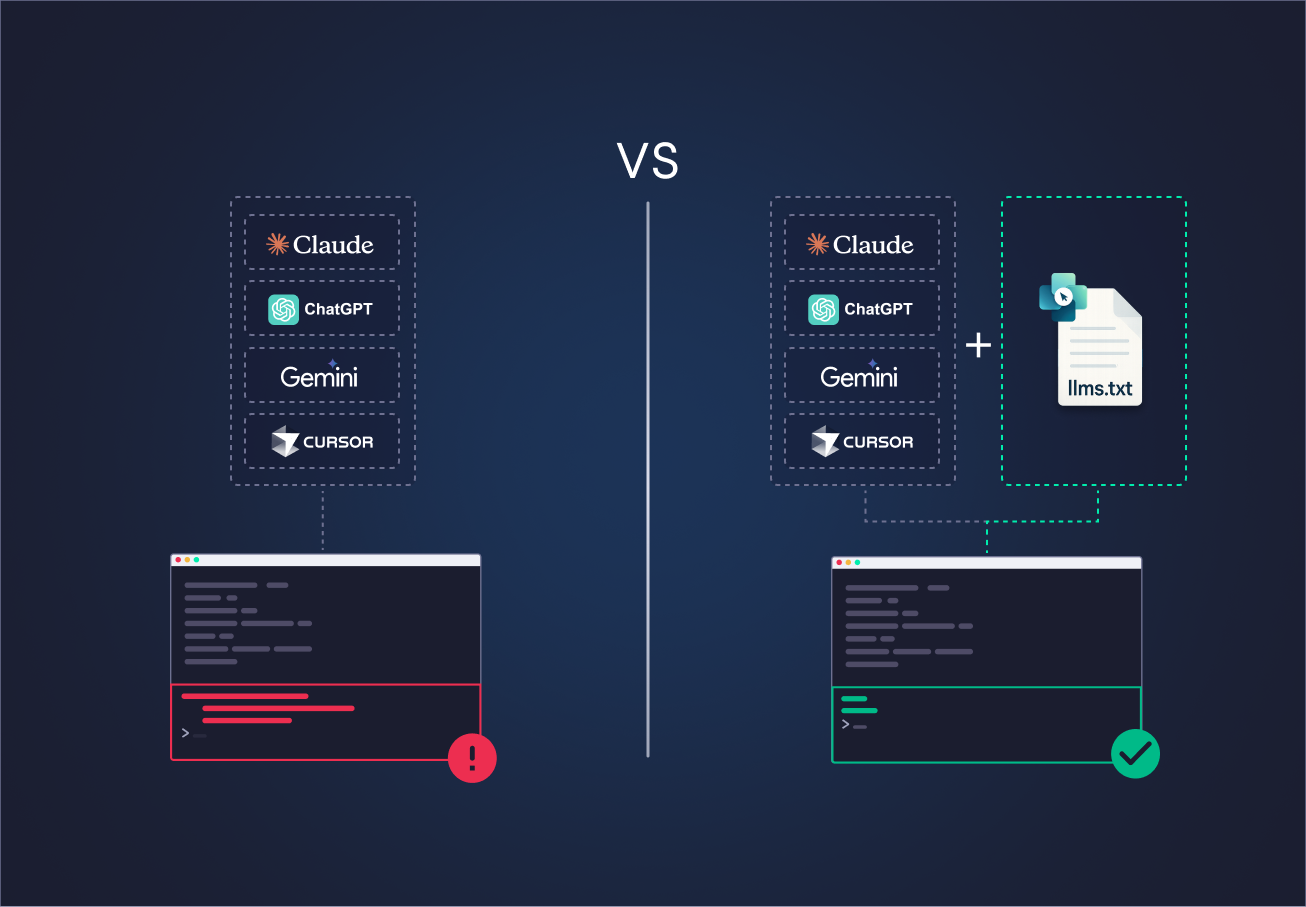

Experienced users, however, may prefer AI-powered code editors such as Windsurf, VS Code with Copilot, and Cursor, or LLM web clients such as ChatGPT, Claude, and Gemini. For these users, the llms.txt file provides clear VIKTOR SDK guidance in a single place, so they can keep their own tools and still get accurate code. Together with the documentation and the VIKTOR App Builder, it is another resource that helps you build engineering tools faster and more safely with VIKTOR.

In this blog, we will go from the basic definition of an llms.txt file to a practical example of how you can use it. We will cover:

- What is an llms.txt file?
- Key concepts
- llms.txt files for VIKTOR
- How to use llms.txt files with AI clients

What is an llms.txt file?

llms.txt files are plain-text (Markdown) files that give an AI agent extra guidance: APIs, patterns, examples, and tips the model may not know well. They can explain how to use a Python library or feature that the model was not trained on or only partially learned. This helps the agent write better code and work with tools beyond its original training data, which makes development faster and the AI more useful.

In some cases, an llms.txt file also lists URLs that the agent can fetch for more context, working like a navigation menu. In practice, each llms.txt file is served at its own URL and contains the documentation content as Markdown. There is also a proposed standard (llmstxt.org) that sets guidelines for the content of these files.
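The navigation-menu idea is easy to see in code. The llms.txt proposal suggests a Markdown layout with an H1 title, a short blockquote summary, and H2 sections containing link lists of the form `- [name](url): notes`. The minimal sketch below assumes that layout and extracts the links so a tool could decide which pages to fetch next. The names `DocLink` and `parse_llms_links` and the sample content are hypothetical illustrations, not taken from VIKTOR's files.

```python
import re
from typing import NamedTuple


class DocLink(NamedTuple):
    section: str  # the H2 heading the link appears under ("" if none)
    title: str
    url: str
    notes: str


# Matches list items such as "- [Views](https://example.com/views.md): notes"
LINK_RE = re.compile(r"^\s*-\s*\[([^\]]+)\]\(([^)\s]+)\)(?::\s*(.*))?\s*$")


def parse_llms_links(text: str) -> list[DocLink]:
    """Collect Markdown links from an llms.txt-style index, grouped by H2 section."""
    links: list[DocLink] = []
    section = ""
    for line in text.splitlines():
        if line.startswith("## "):
            # A new H2 section starts; later links belong to it
            section = line[3:].strip()
            continue
        match = LINK_RE.match(line)
        if match:
            title, url, notes = match.groups()
            links.append(DocLink(section, title, url, notes or ""))
    return links
```

An agent-side tool could then fetch only the URLs from the sections relevant to the current question instead of loading everything at once.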

Key concepts

Before we go deeper into using llms.txt files with agents, let’s first explain a few concepts so the later workflows feel clear.

Tokens: small chunks of text (whole words or parts of words) that the model turns into numbers so it can process language.

Context length: the max number of tokens the model can look at in one request (your prompt + llms.txt file + any tool output).

Agents: systems that plan steps and call tools to get stuff done with less hand‑holding.

Tools: external functions / APIs the agent can call when the model alone isn’t enough.

AI code editors: editors with built-in model assistance (VS Code with Copilot, Cursor, Windsurf) that suggest code while you type.

AI clients: applications that let you use an LLM directly, such as ChatGPT, Claude, or Gemini.

Now let's see how this works in practice. First, you share an llms.txt file URL with an AI tool (a web client like ChatGPT or Claude, or a code editor with Copilot, Cursor, or Windsurf). A fetch tool pulls the file, and the model reads that text together with your question or code. It then answers or generates code that follows what the file explains. A shorter file leaves more room for your own prompt inside the context window. Depending on the tool and model you select, you may have more or less room for large context, and that is where knowing about tokens and context length comes in handy (we will use this later).
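Conceptually, the fetch step is an HTTP GET followed by placing the downloaded text next to your request. The sketch below uses only Python's standard library to illustrate that idea. Real agents wrap this in their own fetch tools and prompt formats, so `fetch_text`, `build_prompt`, and the prompt wording are hypothetical.

```python
import urllib.request

LLMS_URL = "https://docs.viktor.ai/llms.txt"


def fetch_text(url: str, timeout: float = 10.0) -> str:
    """Download a plain-text/Markdown file and decode it as UTF-8."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def build_prompt(guidance: str, question: str) -> str:
    """Place the fetched guidance ahead of the user's request in a single prompt."""
    return (
        "Use the following documentation as the source of truth:\n\n"
        f"{guidance.strip()}\n\n"
        f"Request: {question.strip()}"
    )
```

For example, `build_prompt(fetch_text(LLMS_URL), "Build a VIKTOR app with a TableView.")` produces one prompt containing both the guidance and the request (this call needs network access). Putting the guidance first mirrors how a fetch tool's output usually precedes the model's answer in the conversation.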

llms.txt files for VIKTOR

VIKTOR provides two llms.txt files that condense the parts of the documentation an AI agent needs into short, clear notes. They list classes and functions from the SDK, show required and optional parameters, define expected inputs and outputs, and include small, correct examples. This helps an agent build common views and input blocks with fewer mistakes. For example, the files give enough detail to create a WebView, a PlotlyView, or a MapView, and wire them into a vkt.Parametrization, a vkt.Controller, and the tool’s layout.

- https://docs.viktor.ai/llms.txt is the short version. It acts like an index, listing the main fields, views, and layout patterns. It also provides links to the full documentation when more detail is needed. Because it uses very few tokens, it fits well inside the context window of most AI agents, making it practical when speed and compactness are important.
- https://docs.viktor.ai/llms-full.txt is the full version. It expands on the basics by including class and function signatures, the parameters they expect, and the typical inputs and outputs. It also contains small examples that show correct usage. This version uses more tokens, but in return it gives the agent everything it needs to generate complex applications without missing details.

Typical ways to use these files include:

- Learning: If you're new to the platform, you can ask: "How can I build a tool to plot the base pressure of a footing under axial loads?"
- Modifying apps: My favorite one. Ask: "Move the last three elements of my vkt.Parametrization into a new vkt.Step and add a TableView."
- Debugging: Paste an error from the terminal and ask the AI to point you to the related class, block, or pattern.

How to use llms.txt files with AI clients

When using llms.txt files with an AI agent, you typically share one of the llms.txt (or llms-full.txt) URLs and let the agent load the guidance, ask for a first draft of the feature or tool, run it to see what works and what breaks, and then iterate with focused prompts (fix errors, extend features, refactor). This keeps the model anchored in vetted documentation, reduces hallucinated APIs, and makes iterations faster and safer. We’ll show this in three styles: an editor agent (Copilot in VS Code), a chat client (ChatGPT), and AI-first editors (Windsurf / Cursor).

Visual Studio Code (Copilot)

VS Code is one of the most widely used code editors in the world, and its usage keeps growing thanks to new AI features. One of them is Copilot Chat. Copilot acts like a coding agent that can look at your repository and answer in context.

You can set up Copilot by following the official setup guide and linking your GitHub account. There is a free plan and a Pro plan, so you can try it first at no cost.

Copilot Chat lets you ask questions about your codebase, request edits, and run agent tasks in agent mode. Agent mode works best with an llms.txt file because it has tools that can fetch the file from its URL and load the content before generating code.

Let's first try to create an application without passing the llms.txt file. We will use the following prompt for testing, with GPT-5 as our LLM.

Task: build a VIKTOR application that sizes a pressure pipe using Hazen Williams, takes design flow, pipe length, roughness coefficient, local loss coefficient, and elevation difference, computes head loss and velocity, generates an iteration table for a list of standard diameters with pass or fail against limits for velocity and total head loss.

The results are not great. GPT-5 did a good job creating the inputs and the engineering logic, but it failed to create a VIKTOR view. It used the vkt.View method and vkt.HTMLResult instead of vkt.WebView.

Now let's test an llms.txt file in action with Copilot Chat, this time using a different model: Claude Sonnet 4. We will create the same engineering tool, which sizes a pipe diameter using the Hazen–Williams equation and a few hydraulic parameters.
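For reference, the standard SI form of the Hazen–Williams friction loss, together with velocity from continuity and local losses from a loss coefficient, is shown below. Treating the elevation difference as a static head added on top of the losses is our assumption about how the tool combines its inputs, not something stated in the prompt.

$$
\begin{aligned}
h_f &= 10.67\,\frac{L\,Q^{1.852}}{C^{1.852}\,D^{4.87}} \\
v &= \frac{4Q}{\pi D^{2}} \\
h_m &= K\,\frac{v^{2}}{2g} \\
H &= h_f + h_m + \Delta z
\end{aligned}
$$

Here $Q$ is the design flow (m³/s), $L$ the pipe length (m), $C$ the roughness coefficient, $D$ the internal diameter (m), $K$ the local loss coefficient, $g$ the gravitational acceleration, and $\Delta z$ the elevation difference (m); $h_f + h_m$ is the total head loss.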

fetch: https://docs.viktor.ai/llms-full.txt
Task: build a VIKTOR application that sizes a pressure pipe using Hazen Williams, takes design flow, pipe length, roughness coefficient, local loss coefficient, and elevation difference, computes head loss and velocity, and generates an iteration table for a list of standard diameters with pass or fail against limits for velocity and total head loss.
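The engineering core of that prompt fits in a few lines of plain Python. This is a minimal sketch using the SI form of Hazen–Williams, not the code the agent generated. The names `DiameterCheck`, `hazen_williams_loss`, and `iteration_table` are hypothetical. We also assume that "total head loss" means friction plus local losses, and that required head is that loss plus the elevation difference.

```python
import math
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class DiameterCheck:
    diameter_m: float
    velocity_m_s: float
    friction_loss_m: float
    local_loss_m: float
    total_loss_m: float     # friction + local losses (assumed meaning of "total head loss")
    required_head_m: float  # total loss + elevation difference (assumption)
    passes: bool


def hazen_williams_loss(q: float, length: float, c: float, d: float) -> float:
    """Friction head loss in m, SI form: 10.67 * L * Q^1.852 / (C^1.852 * D^4.87)."""
    return 10.67 * length * q**1.852 / (c**1.852 * d**4.87)


def iteration_table(
    q: float,                # design flow, m^3/s
    length: float,           # pipe length, m
    c: float,                # Hazen-Williams roughness coefficient (-)
    k_local: float,          # sum of local loss coefficients (-)
    dz: float,               # elevation difference, m
    diameters: list[float],  # candidate internal diameters, m
    v_max: float,            # velocity limit, m/s
    loss_max: float,         # total head loss limit, m
) -> list[DiameterCheck]:
    """Evaluate every candidate diameter and flag it as pass or fail."""
    rows = []
    for d in diameters:
        v = 4 * q / (math.pi * d**2)   # continuity: v = Q / A
        hf = hazen_williams_loss(q, length, c, d)
        hm = k_local * v**2 / (2 * G)  # local (minor) losses
        total = hf + hm
        ok = v <= v_max and total <= loss_max
        rows.append(DiameterCheck(d, v, hf, hm, total, total + dz, ok))
    return rows
```

For example, `iteration_table(0.05, 1000, 130, 5, 10, [0.15, 0.2, 0.25, 0.3], v_max=2.0, loss_max=15.0)` flags the 0.15 m pipe as failing (velocity about 2.8 m/s) and passes the larger sizes. In a VIKTOR app, these inputs would come from the Parametrization fields and the rows would feed the TableView.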

The VS Code agent plans the steps. First, it fetches the llms-full.txt file. Then it creates the parametrization, builds the controller logic, and reviews the generated code.

We quickly get the core application logic working. The model correctly generates the inputs using Sections and shows the results in a TableView.

This was a tough test. Although the model nailed the TableView and core logic, it produced a DataView with a few errors. That is fine: we just copy the traceback from the terminal and let Copilot fix the code.

Even with no errors, we can ask for more. For example, we want the TableView rows to be red when a diameter fails and green when it passes. Prompt: "Now add a color to the rows that failed and green to the rows that passed."
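The styling logic behind that request is a simple mapping from each row's pass/fail flag to a background color. The sketch below is framework-free. The RGB values, the `StyledCell` structure, and `color_rows` are hypothetical. In the VIKTOR SDK, the color would be attached through the table cell object documented in the SDK reference (we believe this is `vkt.TableCell` with a background color argument, but check the exact signature there).

```python
from typing import NamedTuple

RGB = tuple[int, int, int]

PASS_COLOR: RGB = (198, 239, 206)  # light green (assumed palette)
FAIL_COLOR: RGB = (255, 199, 206)  # light red (assumed palette)


class StyledCell(NamedTuple):
    value: object
    background: RGB


def color_rows(rows: list[list[object]], passes: list[bool]) -> list[list[StyledCell]]:
    """Wrap every cell of each row with a green or red background based on its pass flag."""
    if len(rows) != len(passes):
        raise ValueError("rows and passes must have the same length")
    styled = []
    for row, ok in zip(rows, passes):
        color = PASS_COLOR if ok else FAIL_COLOR
        styled.append([StyledCell(value, color) for value in row])
    return styled
```

Keeping the color decision separate from the table-building code makes it easy to change the palette or the pass criteria without touching the view.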

ChatGPT

A similar workflow works with any popular LLM client, such as ChatGPT, Gemini, or Claude. Let’s build the same application, but this time using GPT-5 Thinking in the ChatGPT desktop app instead of Sonnet 4.

To use the Thinking model, you need a paid plan such as Plus or Pro, but you can get similar results with the regular GPT-5 model.

The results are similar. GPT-5 Thinking added the TableView colors on the first try, whereas with Sonnet 4 we had to prompt again; on the other hand, Sonnet included a DataView summary. In the end, performance is close; the only drawback is not having everything inside the editor.

Cursor / Windsurf (AI-first code editors)

Another popular option is to use an AI‑first code editor. The most common ones right now are Cursor and Windsurf. Since they are based on Visual Studio Code, they feel familiar to set up and the UI is similar. They keep adding features and have generous free tiers, and you can also use the VIKTOR llms.txt files in them.

Conclusion

In this blog, we saw how llms.txt files can change the way engineers and AEC developers approach automation. Instead of losing hours in complex documentation, you can use llms.txt files with AI tools and low-code platforms like VIKTOR to build faster, safer, and more reliable engineering tools.

If you are still learning and not ready to jump straight into an AI code editor, you can start by describing the app you need in plain language. The VIKTOR App Builder will create a first version with inputs, layout, and starter logic in just a few minutes. You can check the following blog to get started: AI Builder.

Developing tools for engineering has never been simpler. Create your free VIKTOR account now and enhance your projects!